Atmospheric Sound Lamp is a group project with Gabriel Andrade.

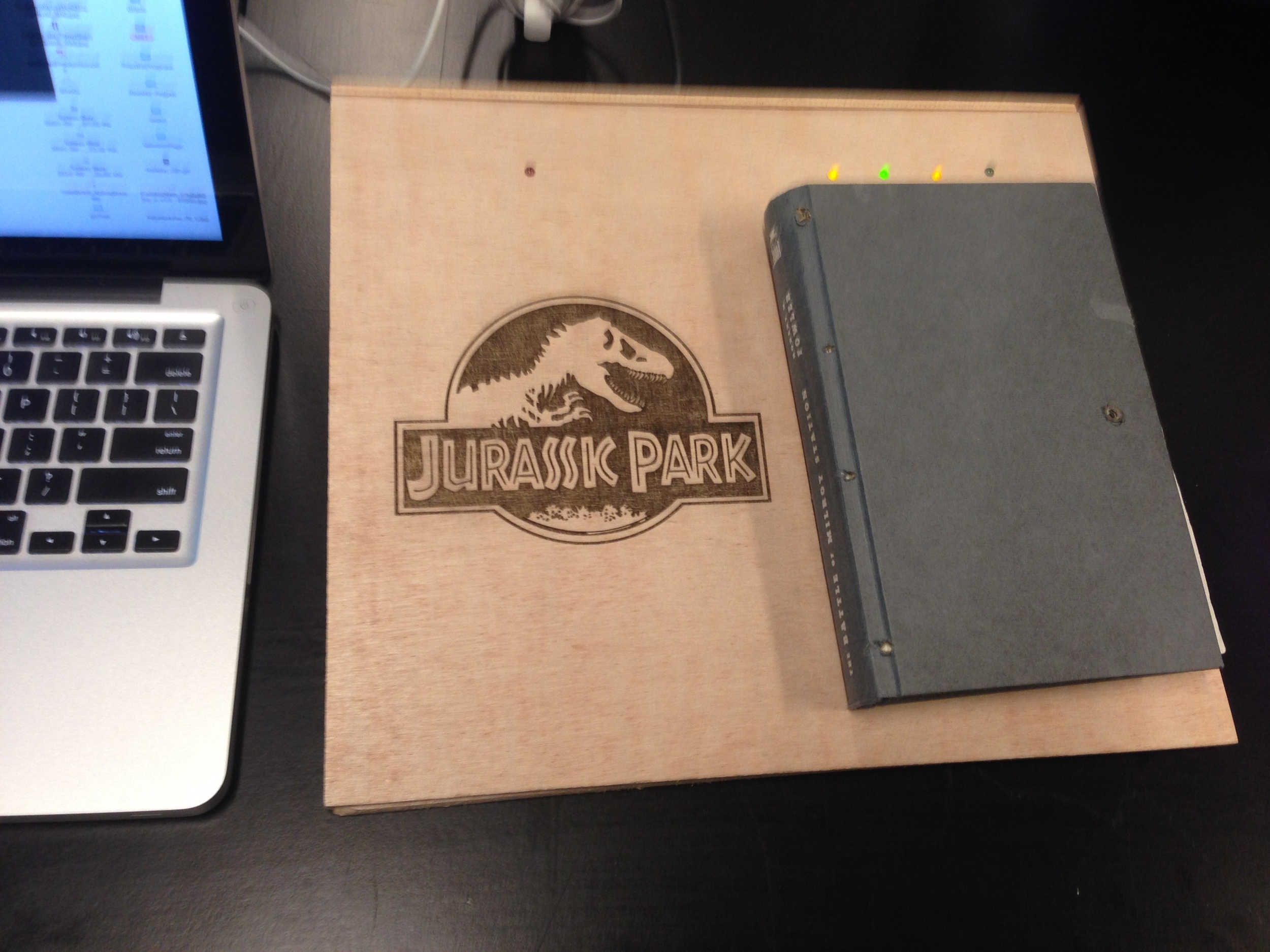

It is a physical instrument that combines two senses, sound and light, to create an atmospheric experience. It is made of three white paper mache lamps on a rack. When people touch a lamp, it emits warm light and plays cosmic sounds. The light shines through the many cuts in the lamp case and casts many small shadows around the room. The volume is determined by the area of the touch, and the tune depends on the length of the touch. In addition, the paper mache provides a crafty texture that encourages people to touch it. People can touch two or three lamps at once, or touch the lamps in different orders, to play with the sound and enjoy the warmth. For adults, these lamps bring a peaceful, meditative, warm experience; for kids, they spark curiosity about light and sound.
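To make the touch-to-sound mapping concrete, here is a minimal sketch in Python. It is not our actual Max/MSP patch. It assumes the sensor gives a raw reading that grows with the touched area, and it reads "length of the touch" as how long the touch is held. The function names, the reading range, the frequency range, and the ramp time are all made-up values for illustration.

```python
def clamp01(x):
    """Clamp a number into the range 0.0 to 1.0."""
    return max(0.0, min(1.0, x))


def touch_to_volume(reading, baseline=100, full_touch=900):
    """Map a raw touch reading to a volume between 0.0 and 1.0.

    baseline and full_touch are hypothetical calibration values:
    the reading with no touch and the reading with a whole palm on the lamp.
    """
    return clamp01((reading - baseline) / (full_touch - baseline))


def touch_to_pitch(duration_s, low_hz=110.0, high_hz=440.0, ramp_s=4.0):
    """Glide from low_hz to high_hz while the touch is held for ramp_s seconds.

    The interpolation is exponential so equal time steps are heard as
    equal pitch steps. All default values are assumptions.
    """
    progress = clamp01(duration_s / ramp_s)
    return low_hz * (high_hz / low_hz) ** progress


if __name__ == "__main__":
    # A light, short touch versus a full, long touch.
    print(touch_to_volume(300), touch_to_pitch(0.5))
    print(touch_to_volume(900), touch_to_pitch(4.0))
```

With a mapping like this, a fingertip tap would be quiet and low, while resting a whole hand on a lamp would be louder and would slowly rise in pitch.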

Ideation: I like projects that combine multiple senses to diversify the experience. So this time I used touch as the input and light and sound as the outputs to create a rich and consistent interaction. In addition, I have always been attracted to lights, especially in the winter. I always try to bring warmth and comfort to the people around me.

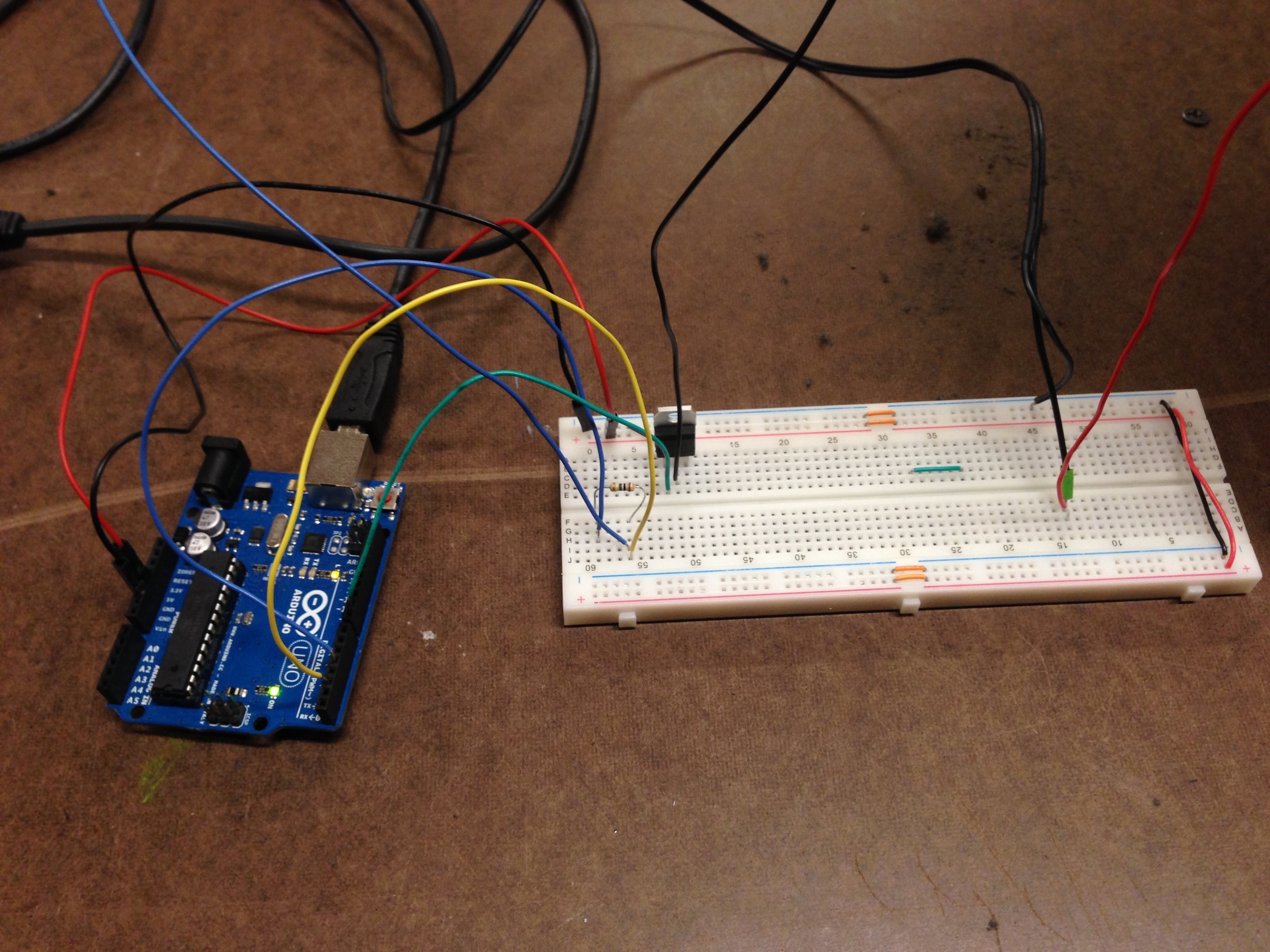

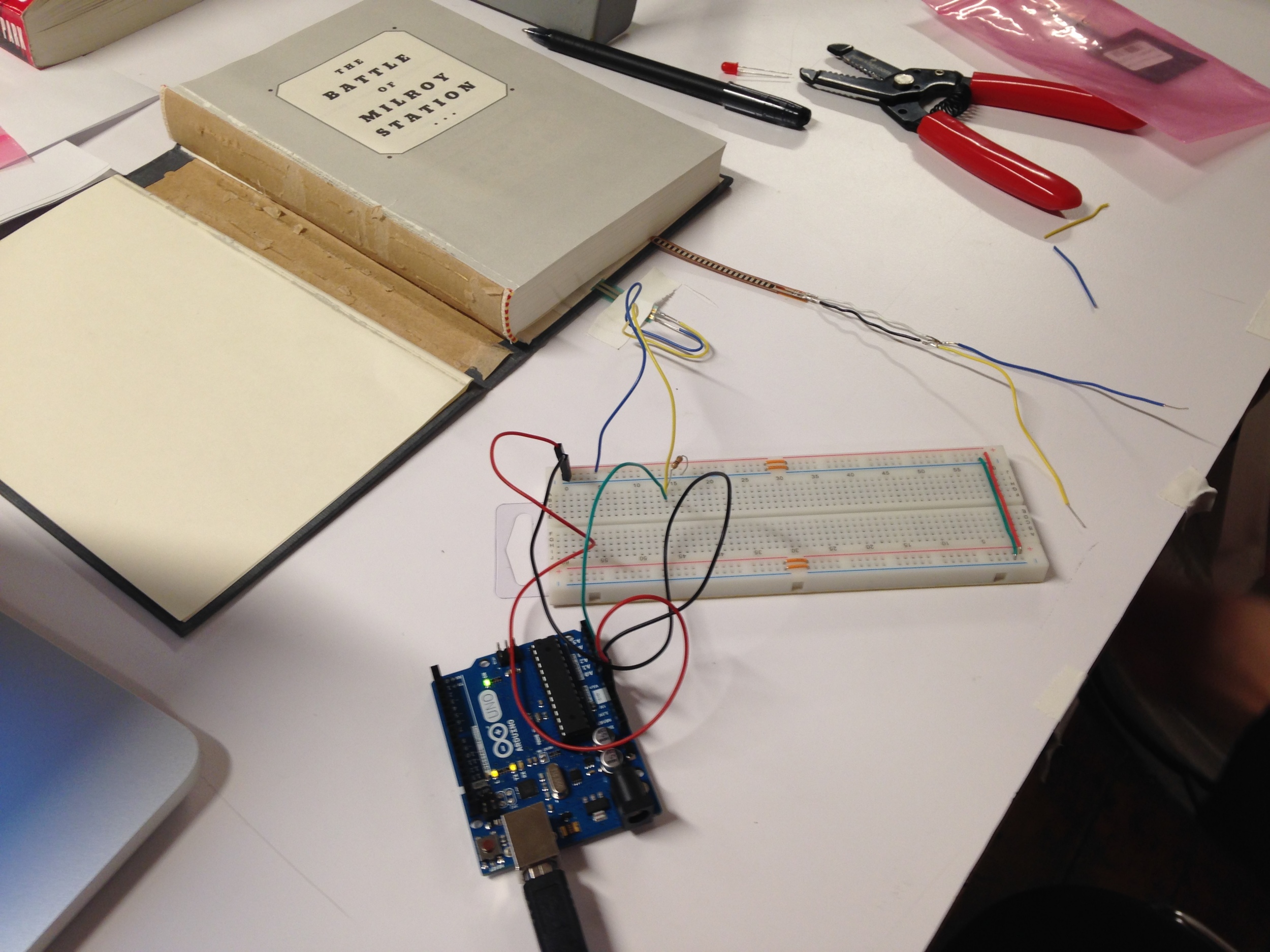

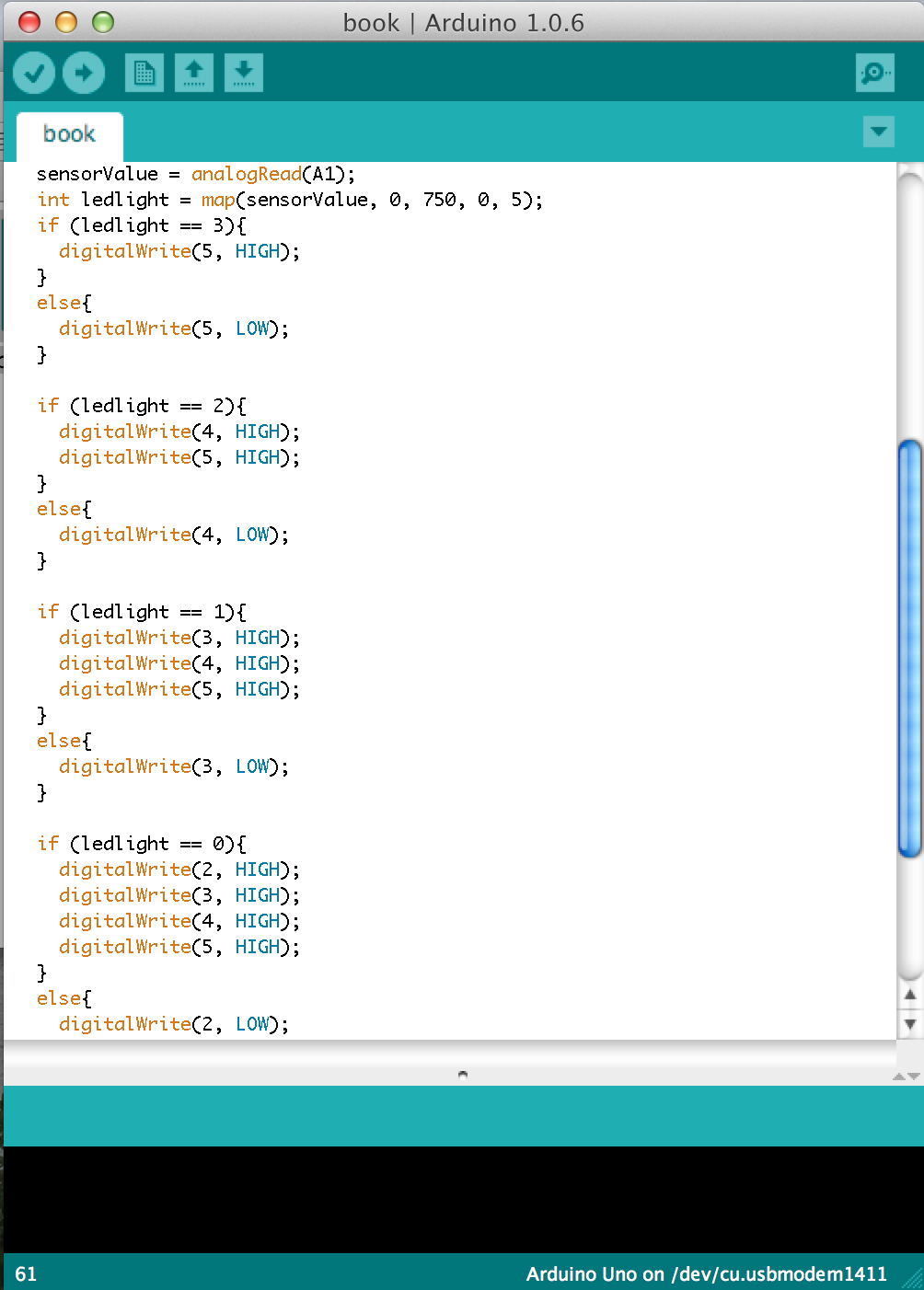

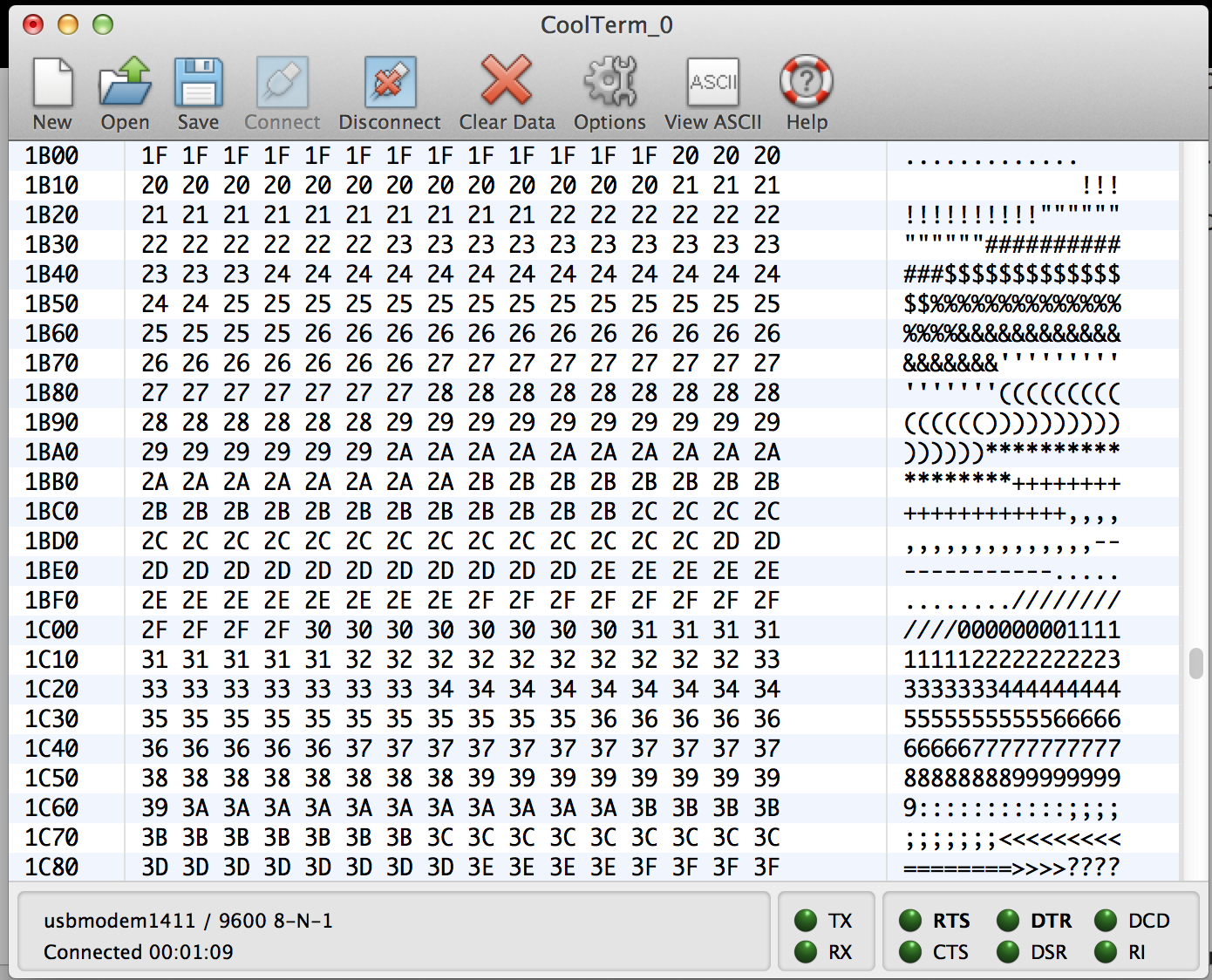

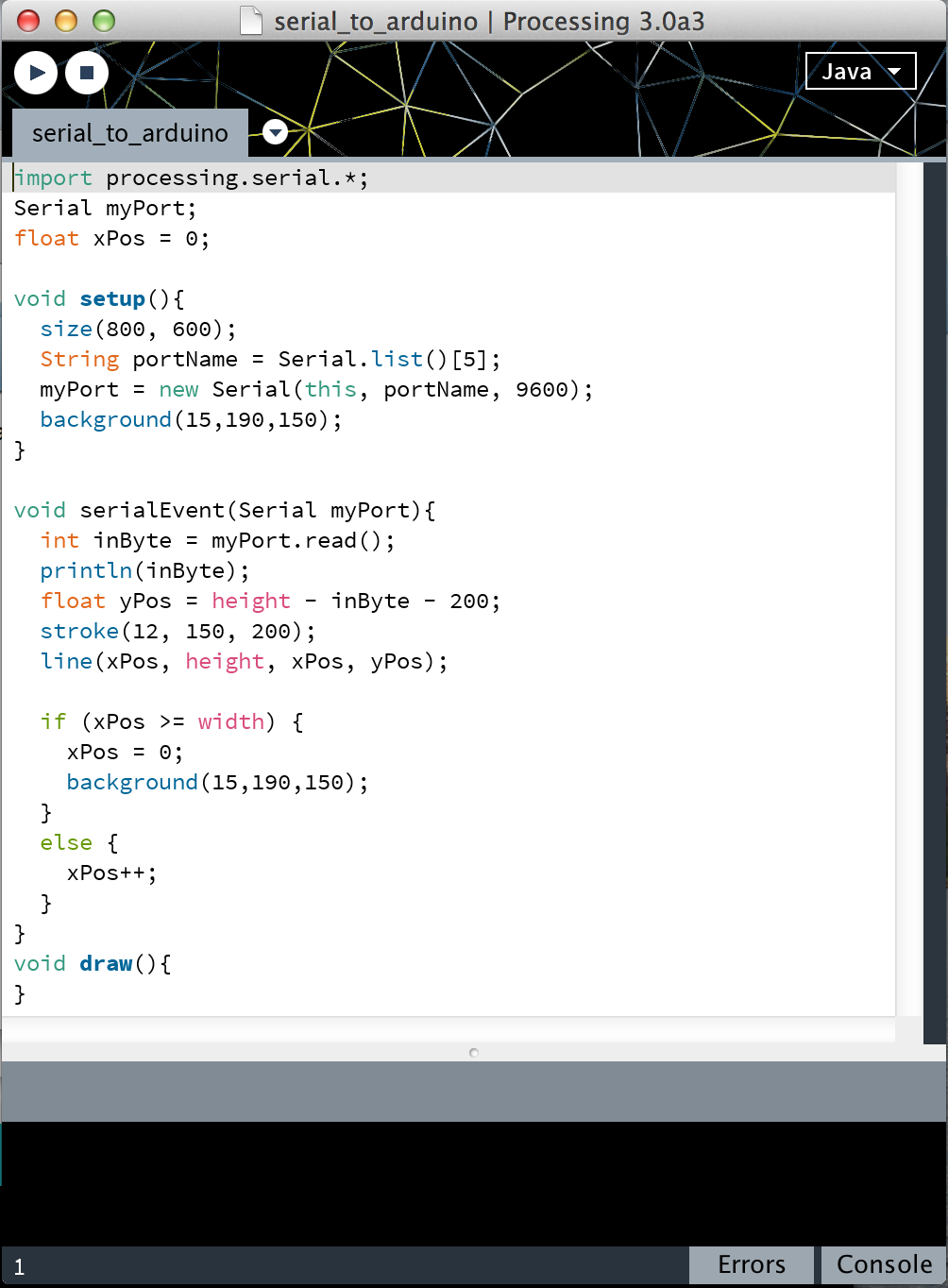

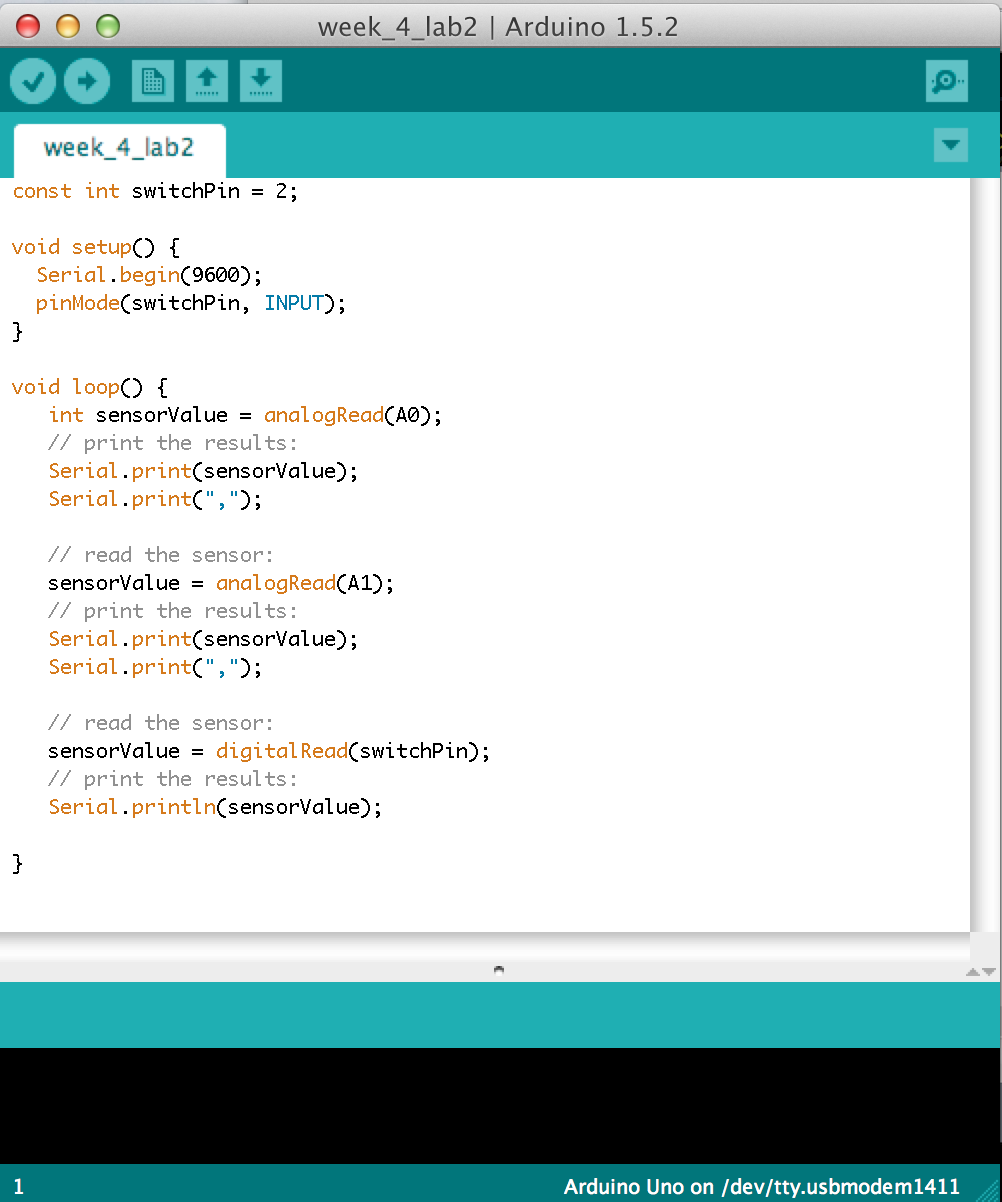

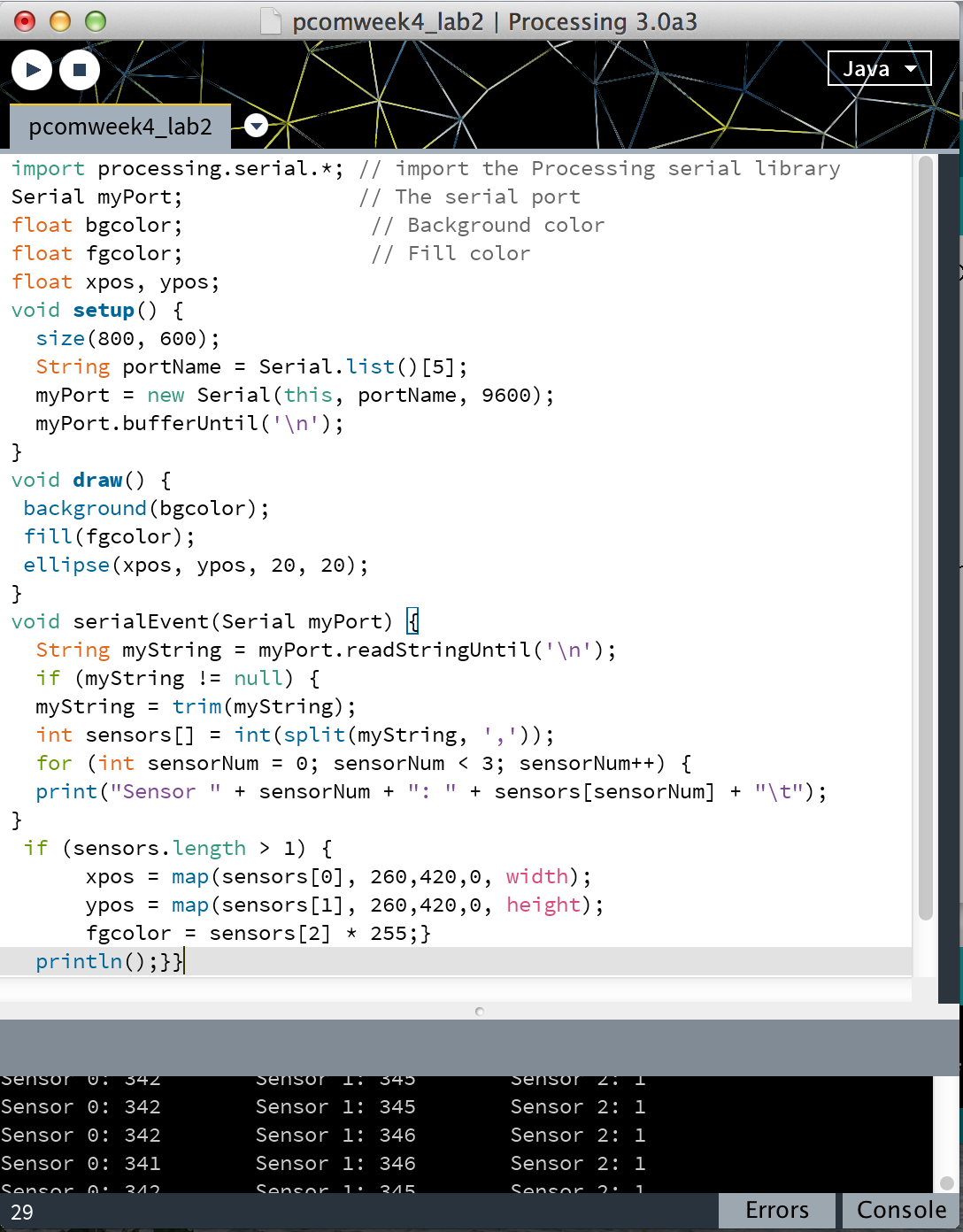

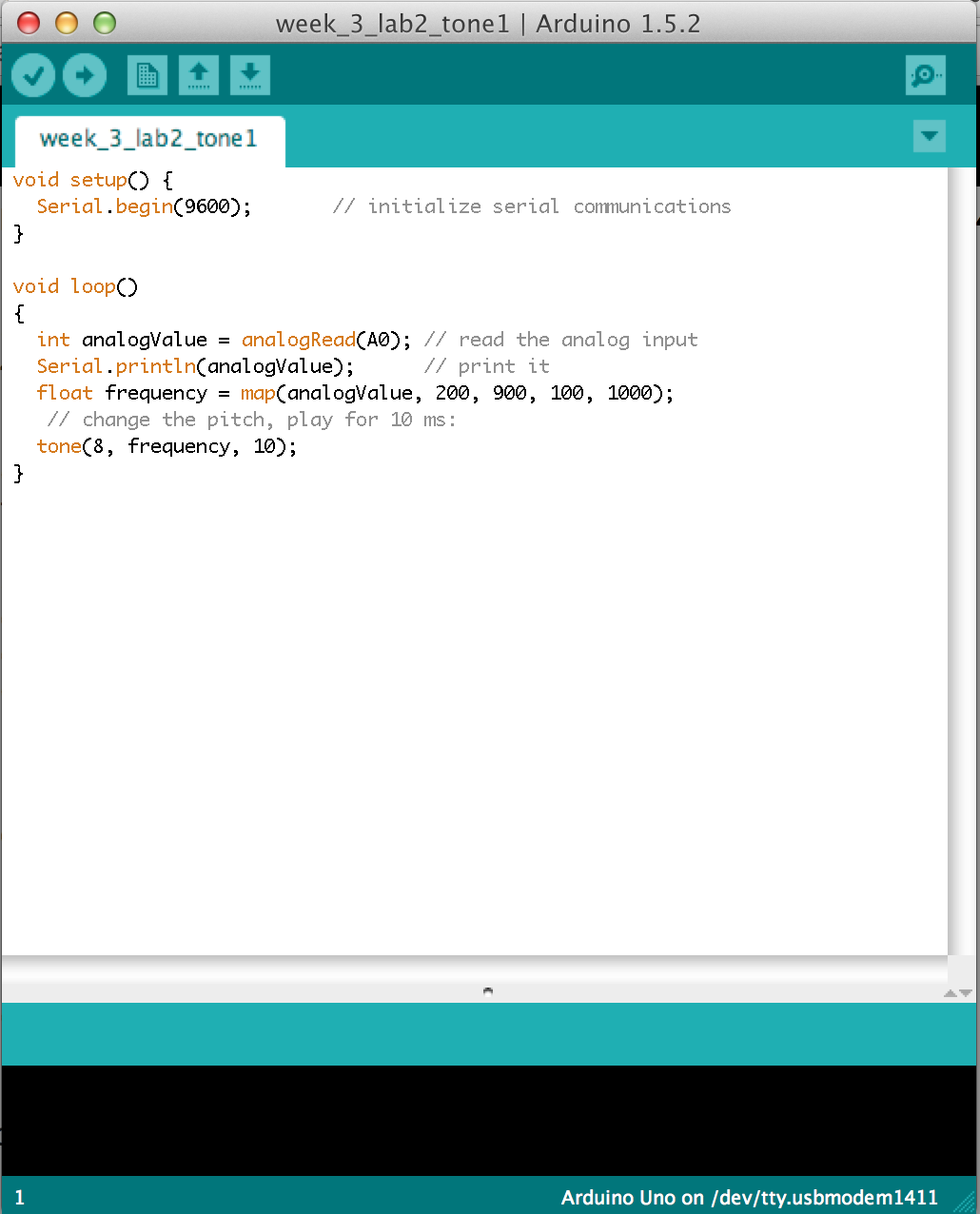

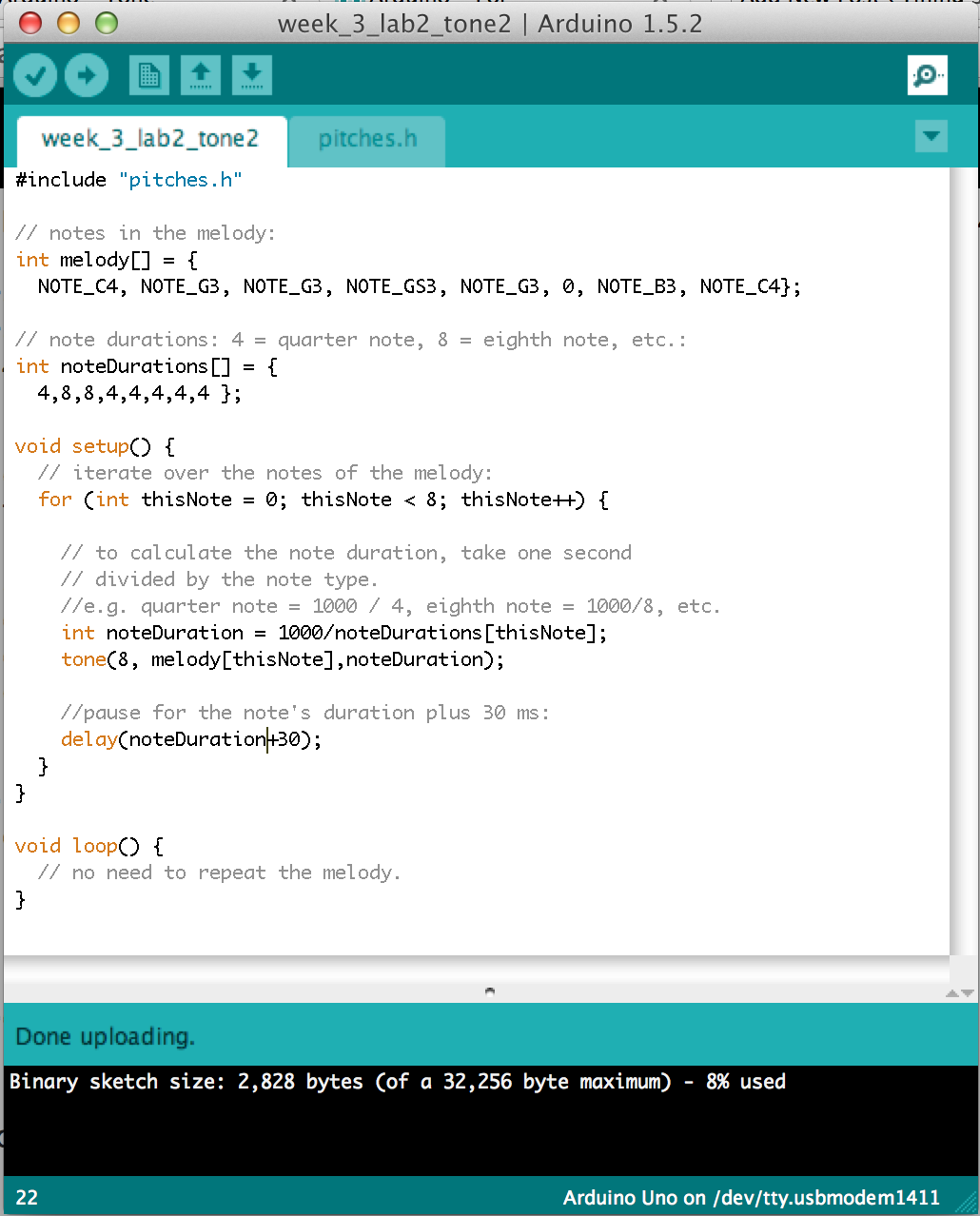

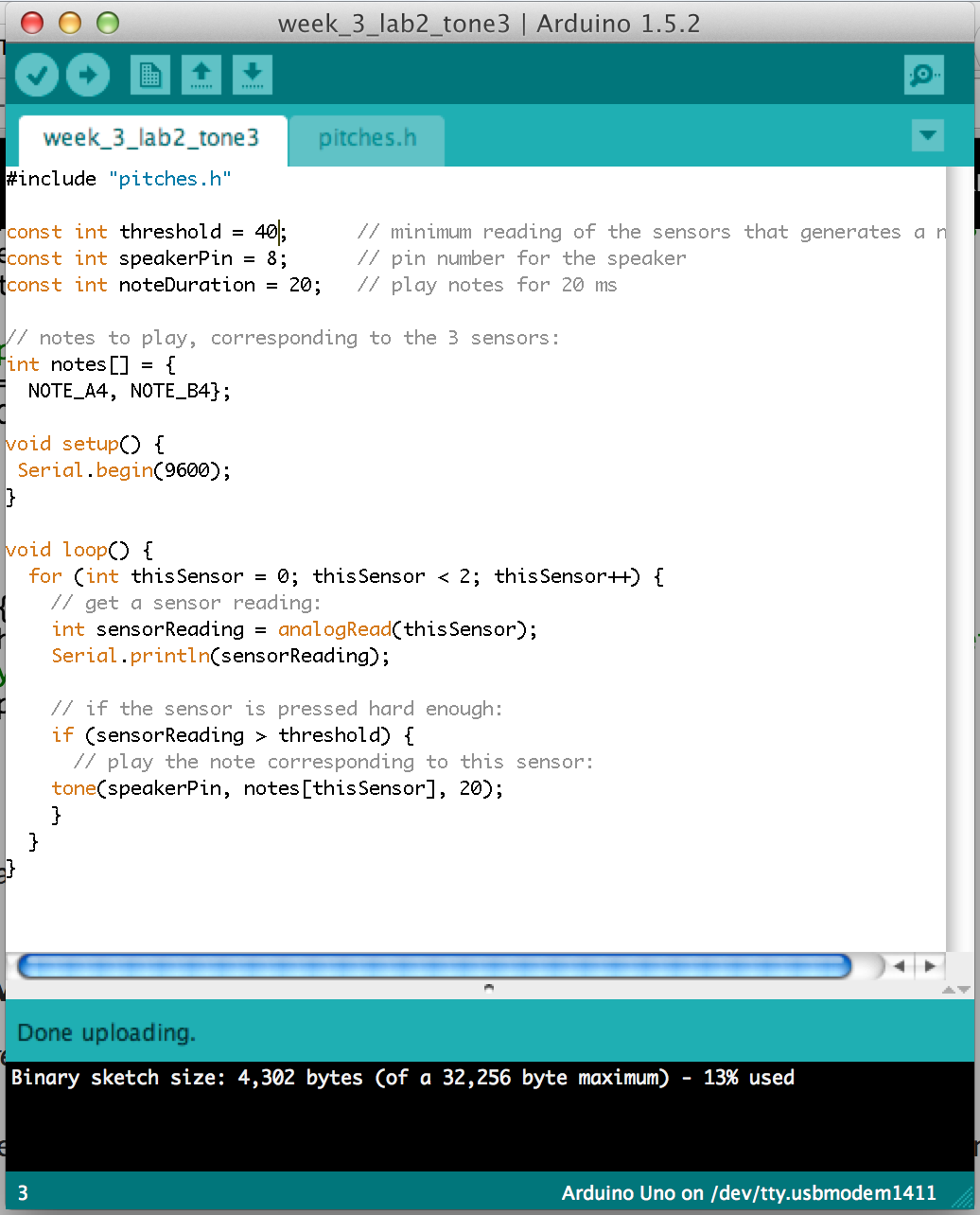

Implementation: We used Max/MSP to generate the atmospheric sounds. We built the circuits with aluminum foil, 12V lamps, a 12V DC power supply, and an Arduino. In addition, we used old newspapers to build the paper mache lamp cases. Here are the circuits.

User test: We started by encouraging users to light the lamps by singing out loud. However, in the user test most people were not comfortable doing so in public. Instead, they were more likely to touch and speak. Therefore, we changed our method of interaction to touch. We learned to respect users' behavior and to create interactions that follow what people naturally tend to do.